There is growing interest in multimodal physiological data collection for understanding decision-making and cognitive performance, and for designing brain-computer interfaces.

Part 1: Mainly on EEG artifacts

summary of data sources

The source of EEG activity is mainly the post-synaptic activity of cortical pyramidal cells, along with glial cell activity, as measured at the scalp. fMRI is derived from measuring changes in blood oxygenation (the BOLD signal) in different regions of the brain. Eye tracking is the primary tool for measuring pupil size (pupillometry) and gaze.

where each excels

Eye tracking excels in paradigms where:

- knowing whether a stimulus was seen is important,

- modeling decision-making (i.e. behavior before events of interest) is a priority.

EEG excels where:

- temporal resolution of brain activity is important,

- measuring reactivity to stimuli or events of interest is necessary,

- the goal is to interact with an interface using brain activity.

fMRI excels where:

- spatial resolution in the context of a task is important,

- connectivity of brain regions and how it plays a role in decision-making is important.

artifact removal (as a function of adding on data sources)

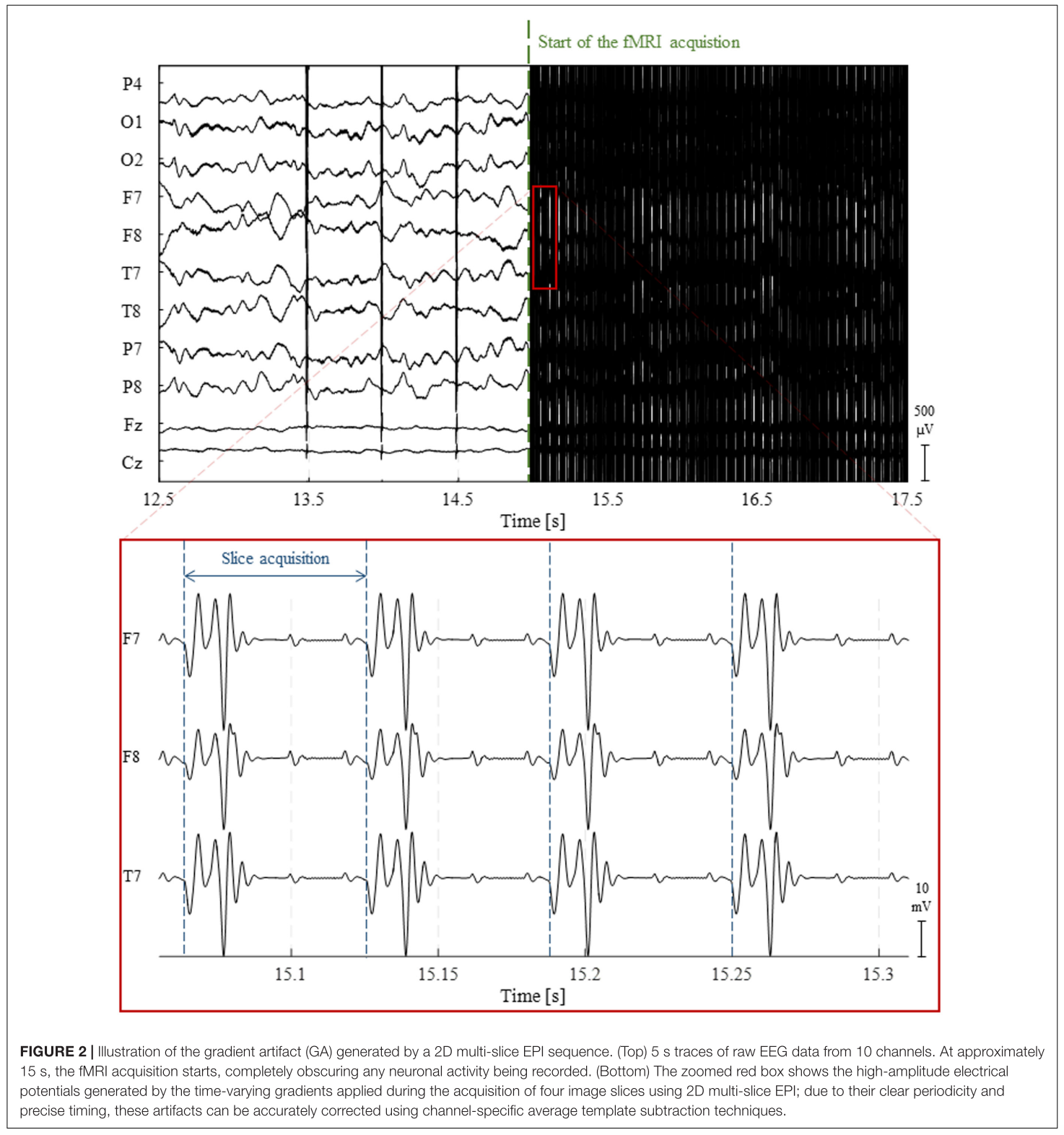

EEG appears to be the type of data that is not only most prone to signal variations on its own, but also most affected by adding other sources. For example, gradient artifacts, which result from the rapidly switching magnetic field gradients of the fMRI scanner, overwhelm the raw EEG signal in amplitude to the point where you cannot use the same EEG pipelines as you would in unimodal data collection. Abreu et al.'s review is a great resource for a summary of recent (but notably, non-deep learning) methods for removing gradient artifacts from EEG [1].
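To make the amplitude problem concrete, here is a minimal sketch of average artifact subtraction, one classic template-based approach to gradient artifacts. It is not necessarily one of the methods the review recommends, and everything in it is an assumption for illustration: the synthetic signals, the amplitudes, the `period` parameter, and the helper names. It also assumes the artifact repeats identically and is perfectly aligned to the sample clock, which real recordings only approximate.

```python
import math
import random


def average_artifact_subtraction(signal, period):
    """Subtract a mean artifact template from each complete period of `signal`.

    `period` (hypothetical name) is the artifact repetition length in samples.
    """
    n_epochs = len(signal) // period
    # Sample-wise mean across epochs: activity that is not locked to the
    # artifact timing averages toward zero, while the artifact does not.
    template = [
        sum(signal[e * period + i] for e in range(n_epochs)) / n_epochs
        for i in range(period)
    ]
    cleaned = [signal[i] - template[i % period] for i in range(n_epochs * period)]
    return cleaned, template


def rms(values):
    """Root-mean-square amplitude of a sequence."""
    return math.sqrt(sum(v * v for v in values) / len(values))


# Synthetic demo; all numbers below are made up for illustration.
random.seed(0)
fs = 1000                 # sampling rate in Hz
period = 70               # artifact repeats every 70 samples
n_samples = 50 * period   # 50 artifact repetitions

# "EEG": a 10 Hz rhythm of about 20 uV plus Gaussian noise.
eeg = [
    20.0 * math.sin(2 * math.pi * 10 * i / fs) + random.gauss(0.0, 5.0)
    for i in range(n_samples)
]
# "Gradient artifact": a periodic waveform far larger than the EEG.
artifact = [
    1000.0 * math.sin(2 * math.pi * (i % period) / period)
    + 500.0 * math.sin(6 * math.pi * (i % period) / period)
    for i in range(n_samples)
]
raw = [e + a for e, a in zip(eeg, artifact)]

cleaned, template = average_artifact_subtraction(raw, period)

error_before = rms([r - e for r, e in zip(raw, eeg)])
error_after = rms([c - e for c, e in zip(cleaned, eeg)])
print(f"RMS error before: {error_before:.1f} uV, after: {error_after:.2f} uV")
```

The sketch only works this cleanly because the 10 Hz rhythm is not phase-locked to the 70-sample artifact period. Any brain activity that happens to be locked to the scanner timing would end up in the template and be subtracted along with the artifact.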

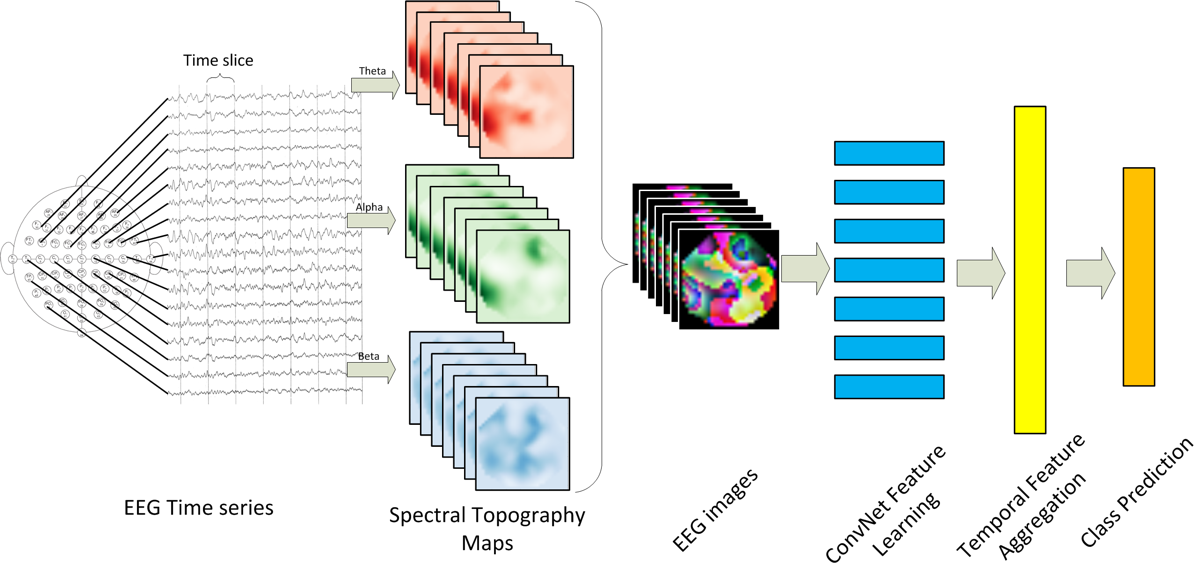

I couldn't help but feel lost in the signal processing background literature, but it was interesting to take a step back and read a study where artifact removal and noise filtering in EEG, for the most part, weren't really needed. It was part of a poster presentation by Tevis Gher at UNL, and it highlights the potential of deep learning techniques to avoid tedious signal processing pipelines, but only for classification problems.

The task is a discriminative one: a system classifies whether an EEG waveform was recorded from an individual who is visualizing a familiar or an unfamiliar motor-based task. In this case, it's not really important to filter noise, artifacts, etc. A convolutional network can learn to ignore variations in the signal that are not relevant to the task at hand. In some cases, the results used in the last stage (2D visualizations below) probably make meaningful use of artifacts that would be problematic in other contexts. For example, filtering out blinks may be preferred in most EEG contexts, but blinking may not be an “artifact” in a classification task such as this, if participants tend to blink more when imagining themselves doing a familiar task.
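As a toy illustration of how a convolutional feature can ignore irrelevant variation, the sketch below applies a single hand-set filter followed by ReLU and global max pooling. This is not the poster's model: the kernel, the pattern, and the helper names are all hypothetical, and a real network would learn its filters from data. The point is only that a zero-mean filter with pooling gives the same response regardless of baseline offset or of when the pattern occurs.

```python
def conv1d_valid(signal, kernel):
    """Slide `kernel` over `signal` without padding (cross-correlation)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def feature_response(signal, kernel):
    """One convolutional filter, then ReLU, then global max pooling."""
    return max(max(0.0, v) for v in conv1d_valid(signal, kernel))


# Hand-set, zero-mean kernel standing in for a learned filter (assumption).
kernel = [-1.0, -1.0, 2.0, 2.0, -1.0, -1.0]
# Hypothetical waveform feature that the filter is tuned to.
pattern = [0.0, 0.0, 3.0, 3.0, 0.0, 0.0]


def make_signal(offset, position=None, length=40):
    """Flat signal at `offset`, optionally with `pattern` added at `position`."""
    signal = [offset] * length
    if position is not None:
        for j, v in enumerate(pattern):
            signal[position + j] += v
    return signal


baseline = feature_response(make_signal(0.0, position=5), kernel)
shifted = feature_response(make_signal(0.0, position=25), kernel)   # later in time
offset = feature_response(make_signal(50.0, position=5), kernel)    # large DC offset
absent = feature_response(make_signal(50.0), kernel)                # no pattern

print(baseline, shifted, offset, absent)  # 12.0 12.0 12.0 0.0
```

The same mechanism cuts both ways: if blinks were predictive of the class, a learned filter could just as easily respond to them, which matches the point above about artifacts being useful in a classification setting.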

Image denoising is in practical use in computer vision, but (from an initial look) I did not see much use of deep learning techniques for denoising in cognition research.